This was originally posted on the closed ALT members list; it seemed to generate interest, so I’ve reproduced it here.

The power of little data

Most of the Learning Analytics work I’ve come across thinks in terms of big data. An example problem: we’re tracking our 300 first years; given the hundreds of measures we have on the VLE and hundreds of thousands of data points, can we produce an algorithm that uses that big data to identify those in danger of dropping out, and how should we best intervene to support them? Fine, a worthy project.

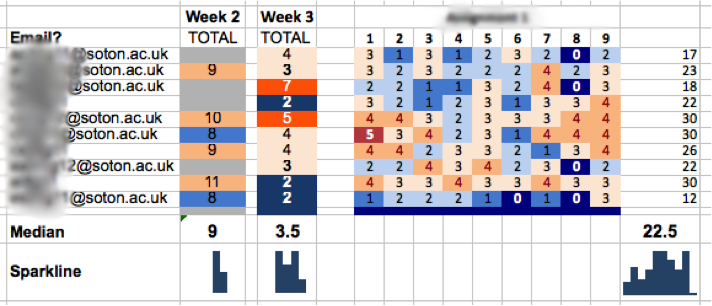

However, it isn’t the only way of tackling the problem. Could we visualize key milestones, say a count of how many of the key course readings a student has accessed each week so that the tutor can easily scan the students’ progress? It would look a bit like this:

At a glance, the tutor can identify non-progressing students. She should then be able to drill down for more data (e.g. how much an individual student has been posting in forums) and intervene with those students in whatever way she thinks best.
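To make the idea concrete, here is a minimal Python sketch of the data behind such a grid. It is an illustration, not the code behind my prototype, and it assumes a hypothetical VLE access log of `(student_id, resource_id, week_number)` rows; the function names `reading_grid` and `flag_non_progressing`, the reading IDs and the "quiet for two weeks" threshold are all made up for the example. The set of key readings is just a parameter, so a tutor could supply their own.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple

# Hypothetical log row: (student_id, resource_id, week_number).
# This schema is an assumption; a real VLE export will look different.
Event = Tuple[str, str, int]


def reading_grid(events: Iterable[Event], key_readings: Set[str],
                 students: List[str], weeks: List[int]) -> Dict[str, List[int]]:
    """Build a student x week grid where each cell is the number of
    distinct key readings that student accessed in that week.
    Accesses to anything not in key_readings are ignored."""
    seen: Dict[Tuple[str, int], Set[str]] = defaultdict(set)
    for student, resource, week in events:
        if resource in key_readings:
            seen[(student, week)].add(resource)
    # .get avoids adding empty entries for student/week pairs with no accesses
    return {s: [len(seen.get((s, w), set())) for w in weeks] for s in students}


def flag_non_progressing(grid: Dict[str, List[int]], min_per_week: int = 1,
                         recent_weeks: int = 2) -> List[str]:
    """Hypothetical rule: flag students below min_per_week key readings in
    every one of the most recent `recent_weeks` weeks (recent_weeks >= 1).
    The tutor, not this function, decides what to do about them."""
    return sorted(
        s for s, counts in grid.items()
        if all(c < min_per_week for c in counts[-recent_weeks:])
    )


if __name__ == "__main__":
    key_readings = {"R1", "R2", "R3"}  # illustrative reading IDs
    students = ["alice", "bob", "chen"]
    weeks = [1, 2, 3]
    events = [
        ("alice", "R1", 1), ("alice", "R2", 2), ("alice", "R3", 3),
        ("bob", "R1", 1), ("bob", "forum", 2),  # forum visit is not a key reading
        ("chen", "R1", 1), ("chen", "R1", 1), ("chen", "R2", 3),
    ]
    grid = reading_grid(events, key_readings, students, weeks)
    for student, counts in grid.items():
        print(f"{student:<6}", counts)
    print("Worth a closer look:", flag_non_progressing(grid))
```

Run as a script, this gives alice `[1, 1, 1]`, bob `[1, 0, 0]` and chen `[1, 0, 1]`, and flags only `['bob']`: Chen had a quiet week 2 but picked up again in week 3. The flag merely surfaces candidates for the tutor to look at; it makes no prediction and takes no action, which keeps the judgement with the tutor.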

The problem then becomes one of deciding which key measures to use (you could even make them customizable at the tutor level, so THEY choose the key measures) and how to visualize them successfully. IMHO, I haven’t seen a VLE learning analytics dashboard that is up to the job (although I haven’t done a proper search). Compared with big data learning analytics, I think this ‘little data’ approach:

– is more achievable,

– has fewer ethical implications, and

– neatly sidesteps the algorithm transparency problem: it’s the tutor’s expertise that is being used, and that has been the case since teaching began.

The screenshot comes from a prototype system I built to help track progress on a flipped learning course I used to deliver at Southampton Uni. I found that telling the students I was tracking their progress was an excellent motivator for them to keep up with the out-of-class activities. Little data and flipped learning was the topic of a talk I gave at Southampton Uni in 2014.

Over the last few days I’ve been playing around with

Over the last few days I’ve been playing around with